By Iris Yim

Note: This article is based on presentations at the China International Advertising Festival and AdAsia 2025, held in Beijing on Oct. 24–26, 2025.

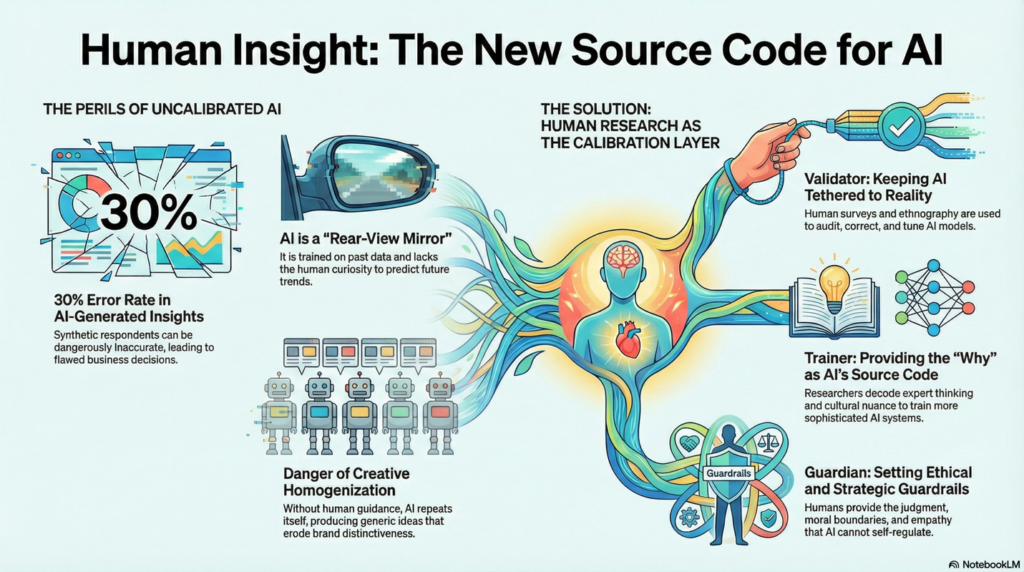

The message coming out of AdAsia 2025 and the China International Advertising Festival was unequivocal: AI has transformed how research is conducted, but it has not replaced human insight. Instead, it has redefined the role of human researchers—from data collectors to architects, calibrators, and custodians of cultural meaning.

Across presentations, one theme dominated: human research is now the “ground truth,” the validation engine, and the cultural decoder that keeps AI purposeful, accurate, and ethical in an era increasingly driven by synthetic respondents, automated content, and platform-owned data ecosystems.

Human Research as Validator and Source Code

The most forward-thinking organizations treat human research as the strategic foundation upon which AI systems rely.

Validation of Synthetic Data

The Future Creative Network (FCN) demonstrated the limits of AI personas. Its synthetic respondents achieved only 70% accuracy, a 30% error margin that is far too risky for business decisions. FCN’s solution is now a hybrid workflow:

- AI for scale and speed

- Human surveys every quarter to tune, recalibrate, and correct the model’s logic

In other words, human insight is the auditing layer that keeps synthetic data tethered to reality.
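To make the auditing idea concrete, here is a minimal Python sketch of how a quarterly human survey could serve as the benchmark for a synthetic panel. It assumes synthetic and human answers can be matched question by question; the `Question` structure, the simple agreement metric and the 0.9 recalibration threshold are hypothetical illustrations, not FCN’s actual method.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Question:
    qid: str
    synthetic_answer: str  # answer produced by the AI persona
    human_answer: str      # answer from the quarterly human survey (the benchmark)


def agreement_rate(questions: list[Question]) -> float:
    """Share of questions where the synthetic answer matches the human benchmark."""
    if not questions:
        raise ValueError("need at least one benchmark question")
    matches = sum(q.synthetic_answer == q.human_answer for q in questions)
    return matches / len(questions)


def needs_recalibration(questions: list[Question], threshold: float = 0.9) -> bool:
    """Flag the synthetic panel when agreement falls below a hypothetical threshold."""
    return agreement_rate(questions) < threshold


def disagreements(questions: list[Question]) -> list[str]:
    """List the question IDs where human researchers should review the model's logic."""
    return [q.qid for q in questions if q.synthetic_answer != q.human_answer]


if __name__ == "__main__":
    # Illustrative data only: 7 of 10 answers match, mirroring a 70% accuracy rate.
    benchmark = [Question(f"Q{i}", "yes", "yes") for i in range(7)] + [
        Question(f"Q{i}", "yes", "no") for i in range(7, 10)
    ]
    print(f"agreement: {agreement_rate(benchmark):.0%}")  # agreement: 70%
    print("recalibrate:", needs_recalibration(benchmark))  # recalibrate: True
    print("review:", disagreements(benchmark))  # review: ['Q7', 'Q8', 'Q9']
```

The design choice here is deliberate: the human answers are treated as ground truth, and the output is not just a score but a list of specific questions for researchers to investigate, which is where the recalibration work actually happens.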

Human Thinking as Training Data

Dentsu pushes this further. Having concluded that AI can mimic but cannot ideate, the agency turned qualitative research inward: it conducted in-depth interviews with creators, decoded how directors and writers actually think, and broke down the implicit logic behind each decision. This “thinking model” becomes the AI’s source code. Humans provide the why; AI provides the variations.

Capturing Emotion and Nuance

Platforms such as Xiaohongshu (Rednote) rely on uncovering the human motivations behind spontaneous posts, identifying emotional territories such as “companionship,” “ritual,” or “comfort” that traffic metrics alone cannot reveal. AI analyzes patterns; humans interpret the meaning behind them.

The Perils of Relying Too Heavily on AI Moderators and Synthetic Respondents

The presentations also highlighted alarming risks when brands over-delegate decision-making to AI.

The Rear-View Mirror Problem

As RACA’s Sergey Efimov noted, AI is built on past data—it “knows everything that has already happened.” But creativity, strategy, and innovation are forward-looking. Only human intelligence carries curiosity, imagination, and the ability to spot future cultural shifts.

Hallucination and Overconfidence

A 30% error rate among synthetic respondents is not a minor miscalculation; it is a strategic liability. When AI generates confident answers from flawed logic, brands risk building campaigns on fiction.

Bias and Cultural Mismatch

AI often fails at cultural specificity—particularly in global campaigns. An AI trained on general data may misinterpret Indian, Indonesian, Philippine, or Middle Eastern contexts. Synthetic insights become dangerously misleading without human cultural intelligence.

Homogenization

Without human correction, AI repeats itself, producing identical faces, similar storylines, and “safe” ideas. Human creativity is required to protect brand distinctiveness.

Structural Shortfalls in Platform-Based Research

Major platforms like Tencent and Huawei showcased powerful tools, but they also revealed systemic barriers that require human interpretation.

Data Black Boxes

Platforms control attribution data, leaving brands dependent on modeled predictions rather than observable truth. This limits strategic clarity and increases risk.

Data Fragmentation

The promise of a unified consumer identity remains unfulfilled. JD.com data, CRM systems, offline behavior, and media exposure still live in disconnected silos.

Traffic vs. Value Misalignment

Platforms optimize for stickiness; brands optimize for meaning and conversion. High traffic often masks low value, one factor behind the industry estimate that 36% of ad spend is wasted.

Organizational Silos

When creative, CX, and performance teams use different KPIs, the insight chain breaks. Human researchers play a key role in stitching the story back together.

Where Qualitative Research Fits In: The Critical “Calibration Layer”

Qualitative research has evolved dramatically. It is no longer a standalone phase; it is now embedded throughout the AI lifecycle as the calibration layer that keeps AI outputs grounded in human logic, cultural nuance, and strategic accuracy.

Ground Truth for Reality Checking

Qualitative interviews and ethnography provide the human benchmarks against which AI outputs are evaluated.

This is what keeps synthetic personas honest.

Extracting Tacit Knowledge to Train AI

Rather than interviewing only consumers, researchers now also study creative leaders, strategists, and experts to extract:

- decision rules

- creative heuristics

- emotional logic

- cultural frameworks

This becomes the training data for “synthetic experts” that pass institutional wisdom forward.

Netnography: Mining Spontaneous Human Expression

On platforms like Xiaohongshu, TikTok, and Weibo, qualitative teams study:

- organic conversations

- user-generated pain points

- emergent behaviors

- micro-tribes

These insights power innovation more effectively than high-level traffic dashboards.

The Haier “three-barrel washing machine” case illustrates this: a single user’s comment about the hygiene of washing socks and underwear together drove a national product innovation.

Cultural Strategy and Future Insight

AI looks backward; qualitative research looks forward. It defines the values, rituals, tensions, and belief systems that brands can meaningfully align with.

Human-Centered Design

Technology is no longer launched first and rationalized later. Instead, the process now begins with qualitative research into:

- human needs

- employee pain points

- customer frustrations

AI solutions are then built around these needs.

The New Look of Human Research

Across all discussions, the future role of human research crystallized into three core functions:

Mentorship

Senior researchers now “mentor” AI—providing judgment, interpretation, and moral boundaries. They ensure every AI output aligns with brand purpose and cultural relevance.

Guardrails

Humans create trust frameworks to monitor:

- accuracy

- tone

- ethical boundaries

- privacy

- unintended bias

AI cannot self-regulate; it must be governed.

Empathy Injection

AI can simulate conversation but cannot determine meaning or intent.

Humans define the purpose of each interaction—especially in emotionally sensitive settings like healthcare or education.

As one speaker summarized: “Technology will not replace humans. But humans who use technology will replace those who don’t.”

Human Intelligence Is the Operating System of AI-Driven Research

AI now carries the weight of data processing, but humans provide:

- the cultural context

- the emotional logic

- the curiosity

- the vision

- the ethical compass

- the future orientation

Qualitative research is no longer optional—it is the strategic backbone of AI-powered insight generation.

In this new world, humans don’t compete with machines. Humans teach, calibrate, and guide the machines—so brands can stay distinct, relevant, and human in an increasingly automated landscape.